WAS V6 - security hardening

Overview

There are four basic approaches to attacking a J2EE-based system:

- Network-based attacks

These attacks rely on low-level access to network packets and attempt to harm the system by altering this traffic or discovering information from these packets.

- Machine-based attacks

The intruder has access to a machine on which WebSphere Application Server (WAS) is running. The goal is to limit the intruder's ability to damage the configuration or to see things that shouldn't be seen.

- Application-based external attacks

An intruder uses application-level protocols (HTTP, IIOP, JMX, Web services, and so on) to access the application, perhaps via a Web browser or some other client type. The intruder uses this access to try to circumvent the normal application usage and to do inappropriate things. The key is that the attack is executed using well-defined APIs and protocols. The intruder is not necessarily outside the company, but he is executing code from outside of the application itself. These types of attacks are the most dangerous because they usually require the least skill and can be done from a great distance as long as IP connectivity is available.

- Application-based internal attacks

Multiple applications share the same WAS infrastructure, and we do not completely trust each application. While perfect security is unachievable here, there are techniques that can limit how much damage each application can do. Internal attacks are normally an indicator of untrusted code that is "evil." Generally, that is the concern here. But always remember that a hacker might compromise an application remotely (via the previous techniques), and then leverage internal attack methods to do even more harm.

We will not be considering Denial of Service (DoS) attacks. Preventing DoS attacks requires different techniques that go beyond what an application server can provide. Consider network traffic monitors, rate limiters, intrusion detection tools, and more.

The hardening techniques are organized into the following sections:

- Infrastructure

Actions that can be taken to configure the WAS infrastructure for maximum security. These are typically done once, when the infrastructure is built out, and involve only the system administrators.

- Application configuration

Actions that can be taken by application developers and administrators and are visible during the deployment process. Essentially, these are application design and implementation decisions that are visible to the WAS administrator and are verifiable as part of the deployment process. This section will have a large number of techniques, further reinforcing the point that security is not a bolt-on; security is the responsibility of every person involved in the application design, development, and deployment.

- Application design and implementation

Actions that are taken by developers and designers during development that are crucial to security but may be difficult to detect as part of the deployment process.

- Application isolation

This is explicitly discussed separately because of the complexity of the issues involved.

Within each section, we order the various techniques by priority. Prioritization is, of course, subjective. We've attempted to prioritize threats within each area using roughly this method:

- Machine-based threats are less likely than network threats because access to the machine in production is usually restricted. If this isn't the case in your environment, then these threats become very likely. You need to restrict access to those machines.

- The most serious attacks are performed remotely using only IP connectivity. This implies that all communication must be authenticated.

- Traffic should be encrypted to protect it, but encryption of WAS internal traffic is less important than encryption of traffic that travels "beyond WAS." This is because traffic that leaves WAS traverses networks that may have more points where an attacker can snoop traffic.

SSL is used to protect the following kinds of traffic:

- HTTP

- IIOP

- LDAP

- SOAP

SSL requires the use of public/private key pairs, and in the case of WAS, these keys are stored in key stores.

Public Key Cryptography is fundamentally based upon a public/private key pair. These two keys are related cryptographically. The important point is that the keys are asymmetric -- information encrypted with one key can be decrypted using the other key. The private key is, well, private. That is, always protect that private key. Should anyone else ever gain access to the private key, they can then use it as "proof" of identity and act as you. It's like a password, only more difficult to change. Possessing the private key is proof of identity. The public key is the part of the key pair that can be shared with others.
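The asymmetry described above can be seen with a deliberately tiny RSA key pair. This is a minimal sketch for intuition only: the primes, the exponents, and the names `apply_key`, `PUBLIC_KEY`, and `PRIVATE_KEY` are illustrative assumptions, and numbers this small provide no security at all. Real key pairs are generated by a key management tool and use far larger values plus padding.

```python
# Toy RSA key pair built from two tiny primes (illustration only, NOT secure).
P, Q = 61, 53
N = P * Q                      # modulus shared by both keys
PHI = (P - 1) * (Q - 1)        # 3120
E = 17                         # public exponent
D = 2753                       # private exponent, chosen so (E * D) % PHI == 1

PUBLIC_KEY = (E, N)            # can be shared with others
PRIVATE_KEY = (D, N)           # must always be protected


def apply_key(value, key):
    """Transform an integer (0 <= value < N) with one half of the key pair."""
    exponent, modulus = key
    return pow(value, exponent, modulus)


message = 65

# Encrypt with the public key; only the private key recovers the message.
ciphertext = apply_key(message, PUBLIC_KEY)
recovered = apply_key(ciphertext, PRIVATE_KEY)

# Sign with the private key; anyone holding the public key can verify.
signature = apply_key(message, PRIVATE_KEY)
verified = apply_key(signature, PUBLIC_KEY) == message
```

The same function serves both directions, which is the point: whatever one key does, only the other key undoes. That is why possession of the private key works as proof of identity.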

If there was a secure way to distribute public keys to trusted parties, that would be enough. However, Public Key Cryptography takes things a step further and introduces the idea of signed public keys. A signed public key has a digital signature (quite analogous to a human signature) that states that the signer vouches for the public key. The signer is assuring that the party that possesses the private key corresponding to the signed public key is the party identified by the key. These signed public keys are called certificates. Well-known signers are called Certificate Authorities (CA). It is also possible to sign a public key using itself. These are known as self-signed certificates. These self-signed certificates are no less secure than certificates signed by a certificate authority. They are just harder to manage, as we'll see in a moment.

Figure 1 shows the basic process of creating a certificate using a CA and distributing it, in this case, to perform server authentication with SSL. That is, the server possesses a certificate that it uses to identify itself to the client. The client does not have a certificate and is, therefore, anonymous to SSL.

When looking at this picture, take note that the client must possess the certificate that signed the generated public key of the server. This is the crucial part of trust. Since the client trusts any certificate it has (which in this case includes the CA certificate), it trusts certificates that the CA has signed. It's worth noting that if you were to use self-signed certificates, you would need to distribute manually the self-signed certificate to each client, rather than relying on a well-known CA certificate that is likely already built into the client. This is no less secure, but if you have many clients, it is much harder to manage distributing all of those signing certificates (one for each server) to all clients. It's much easier to distribute just one CA certificate that signs many certificates. That's pretty much it for server authentication using SSL. After the authentication, SSL will actually use secret key encryption to secure the channel, but the details of that aren't relevant to this discussion.

When a client authenticates itself to a server, the process is similar although the roles are reversed. In order for a server to authenticate a client (this is often called client certificate authentication), the client must possess a private key and corresponding certificate, while the server must possess the corresponding signing certificate. That's really all there is to it. Notice what wasn't required. SSL certificate authentication merely determines that the certificate is valid, not who the certificate represents. That is the responsibility of later, post-SSL processing. This has significant implications as we'll see in a moment.

In summary, because SSL uses certificate authentication, each side of the SSL connection must possess the appropriate keys in a key store file. Whenever you configure SSL key stores, think about the fundamental rules about which party needs which keys. Usually, that will tell you what you need.

A powerful SSL trick

Once SSL validates the certificate, the authentication process is over from SSL's perspective. What ideally should happen next is that another component will look at the identity in the certificate, and then use that identity to make an authorization decision. That authorization decision could be the client deciding the server is trusted (Web browsers do this when verifying that the name in the certificate is the same as the Web server's hostname) or the server extracting the user's name and then using that to create credentials for future authorization decisions (this is what WAS does when end users authenticate). Unfortunately, not all systems have that capability. This is where we can take advantage of a popular SSL trick: limiting the valid certificates.

Notice that in the previous scenario involving client authentication, the client presents a certificate that is validated by the server against the set of trusted certificates. Once it is validated, the SSL handshake completes. If we limit the signers we trust on the server, we can limit who can even complete that SSL handshake. In the extreme with self-signed certificates, we can create a situation where there is only one signer: the self-signed certificate. This means that there is only one valid client side private key that can be used to connect to this SSL endpoint -- the private key we generated when we created the self-signed certificate in the first place. This is how we can easily limit who can even connect to a system over SSL, even if the server side components don't provide authorization. We can think of this as creating a secure trusted tunnel at the network layer. Assuming we've configured everything correctly, only special trusted clients can even be connecting over this transport. That's very useful in several situations in WAS, which we will discuss later.
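The effect of limiting trusted signers can be modeled in a few lines. The sketch below is a simplified model, not real SSL: signature verification is reduced to comparing signer names, and the `Certificate` class, the `handshake_completes` function, and all certificate names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Certificate:
    subject: str   # who the certificate claims to identify
    issuer: str    # who signed it (equal to subject when self-signed)


def handshake_completes(client_cert, trusted_signers):
    """Model of client certificate authentication.

    Real SSL verifies a cryptographic signature against the signer's
    public key; this model only checks that the signer is trusted.
    """
    return client_cert.issuer in trusted_signers


# Hypothetical certificates for illustration.
tunnel_cert = Certificate(subject="CN=was-tunnel", issuer="CN=was-tunnel")  # self-signed
employee_cert = Certificate(subject="CN=alice", issuer="CN=Corporate CA")

# Broad trust store: any holder of a certificate from the CA can connect.
broad_trust = {"CN=Corporate CA", "CN=was-tunnel"}

# Restricted trust store: the only signer is the self-signed certificate.
restricted_trust = {"CN=was-tunnel"}

employee_with_broad = handshake_completes(employee_cert, broad_trust)
employee_with_restricted = handshake_completes(employee_cert, restricted_trust)
tunnel_with_restricted = handshake_completes(tunnel_cert, restricted_trust)
```

With the restricted trust store, only the holder of the private key behind the self-signed certificate gets past the handshake, even though nothing on the server side performs authorization.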

Manage SSL

WAS manages keys in key store files. There are two types of key files: key stores and trust stores. A trust store is nothing more than a key store that, by convention, contains only trusted signers. Thus, you should place CA certificates and other signing certificates in a trust store and private information (personal certificates with private keys) in the key store.

Most of WAS uses the new Java-defined key store format known as Java Key Stores (JKS). The IBM HTTP Server and the WAS Web server plug-in use an older key format known as the KDB format (or more correctly, the CMS format). The two formats are similar in function, but are incompatible in format. Be careful not to mix them up.

IBM provides a tool known as ikeyman for managing key stores. It is a very simple tool that creates key stores, generates self-signed certificates, imports and exports keys, and generates certificate requests for a CA. Use this tool when managing key stores.

WAS SSL configurations

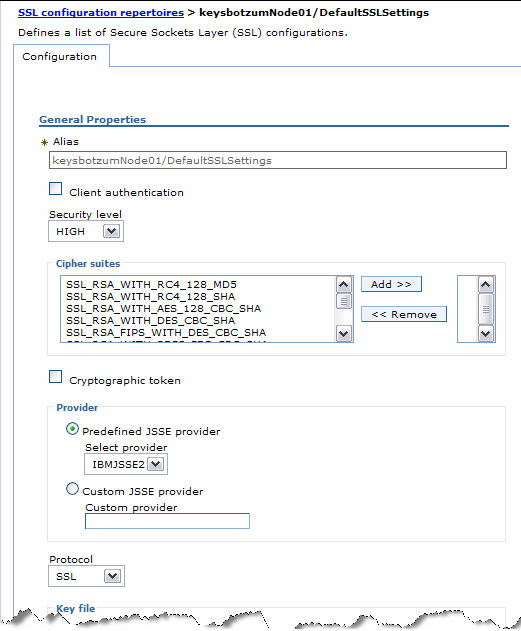

The WAS server code uses SSL configurations whenever an SSL-based transport is used. This means that HTTPS, IIOP/SSL, SSL channels, and LDAP/SSL all share common code for managing SSL configuration. SSL configurations are managed from the global security panel in the SSL area. When you click there, you'll see a list of SSL configurations defined for the cell. You can add your own SSL configurations for new uses or modify the existing configurations.

The key items of interest here are:

- Client authentication

Setting this informs WAS that inbound requests should be authenticated using client certificate authentication. This means that the client will be required to present a certificate and that it will be validated against the signers specified in the configured trust store. Whether anything is done with the identity depends on the protocol. In many situations, WAS does nothing with the identity in the certificate, which is why the SSL trick we mentioned earlier is so helpful.

- Security level

You should set this to HIGH (this is the default) to limit the acceptable ciphers unless you have a good reason not to. If you wish, you can use the pick list to specify precisely the ciphers to use, but this isn't usually necessary.

The lower half of the SSL panel lets you specify the key store file and trust store file. In this case, you'll need to specify the file system location (which must be the same on every server), the key file password (which is used by the server to decrypt the file), and the key file format. WAS supports several standard formats, but JKS is the default. The key file contains the private keys, while the trust file contains the signing keys.

Infrastructure-based preventative measures

Here is a standard WAS topology with network links and protocols.

- H = HTTP traffic
  - Usage: Browser to Web server, Web server to app server, and admin Web client.
  - Firewall friendly.

- W = WAS internal communication
  - Usage: Admin clients and WAS internal server admin traffic. Note that WAS internal communication uses one of several protocols:
    - RMI/IIOP or SOAP/HTTP: Admin client protocol is configurable.
    - File transfer service (dmgr to node agent): Uses HTTP(S).
    - DCS (Distributed Consistency Service) RMM (Reliable Multicast Messaging): Uses private protocol. Used by memory-to-memory replication, stateful session EJBs, dynacache, and the high availability manager.
  - SOAP/HTTP is firewall friendly. DCS can be firewall friendly.

- I = RMI/IIOP communication
  - Usage: EJB clients (standalone and Web container).
  - Generally firewall hostile because of dynamic ports and embedded IP addresses (which can interfere with firewalls that perform Network Address Translation).

- M = SIB messaging protocol
  - Usage: JMS client to messaging engine.
  - Protocol: Proprietary
  - Firewall friendly because the ports used can be fixed. Likely hostile to NAT firewalls.

- MQ = MQ protocol
  - Usage: WebSphere MQ clients (true clients and application servers).
  - Protocol: Proprietary
  - Firewall feasible (there are a number of ports to consider). See WebSphere MQ SupportPac MA86.

- L = LDAP communication
  - Usage: WAS verification of user information in registry.
  - Protocol: TCP stream formatted as defined in LDAP RFC
  - Firewall friendly

- J = JDBC database communication via vendor JDBC drivers

- S = SOAP
  - Usage: SOAP clients
  - Protocol: Generally SOAP/HTTP
  - Firewall friendly when SOAP/HTTP.

As Figure 4 shows, a typical WAS configuration has a number of network links. It is important to protect traffic on each of those links as much as possible to stop intruders. In the remainder of this section, we will discuss the steps required to secure the infrastructure just described.

1. Put the Web server in the DMZ without WAS

There are three fundamental principles of a DMZ that we need to consider:

- Inbound network traffic from outside must be terminated in the DMZ. A network transparent load balancer, such as Network Dispatcher, doesn't meet that requirement alone.

- The type of traffic and number of open ports from the DMZ to the intranet must be limited.

- Components running in the DMZ must be hardened and follow the principle of least function and low complexity.

In a typical DMZ configuration, there is an outer firewall, the DMZ network containing as little as possible, and an inner firewall protecting the production network.

Thus, it is normal to place the Web server in the DMZ and the WAS application servers inside the inner firewall. This is ideal, as the Web server machine then can have a simple configuration and can require little software. It also serves as a point in the DMZ that terminates inbound requests. And finally, the only port that must be opened on the inner firewall is the HTTP(S) port for the target application servers. These steps make the DMZ a very hostile place for an attacker. If you place WAS on a machine in the DMZ, far more software must be installed on those machines, and more ports must be opened on the inner firewall so WAS can access the production network. This largely undermines the value of the DMZ.

2. Separate your production network from your intranet

Today, most organizations understand the value of a DMZ that separates the outsiders on the Internet from the intranet. However, far too many organizations fail to realize that many intruders are on the inside. You need to protect against internal as well as external threats. Just as you protect yourself against the large untrusted Internet, you should also protect your production systems from the large and untrustworthy Intranet. Separate your production networks from your internal network using firewalls. These firewalls, while likely more permissive than the Internet-facing firewalls, can still block numerous forms of attack. Applying this step and the previous step you should end up with a firewall topology like the one shown in Figure 5. For more information on firewall port assignments, see Firewall Port Assignments in WAS.

Notice that we've placed wsadmin on the edge of the firewall. This is attempting to show that while we prefer that wsadmin be run from only within the production network (within the protected area), we can run wsadmin through a firewall fairly easily. We've also shown EJB clients on the edge as they might be on either side of the firewall.

We've chosen to show only a single firewall and not a full DMZ facing the Intranet. This is the most common topology. However, we are increasingly seeing full DMZs (with a Web server in the internal DMZ) protecting the production network from the non-production Intranet. That is certainly a reasonable approach.

3. Enable global security

By default, WAS provides no security. This means that all network links are unencrypted and unauthenticated. As a result, any user with access to the deployment manager (HTTP to the Web admin console or SOAP/IIOP to the JMX management ports) can use the WAS administrative tools to perform any administrative operation, up to and including removing existing servers. Needless to say, this presents a great security risk.

Therefore, at a minimum, you should enable WAS global security in a production environment to prevent these trivial forms of attack. Once WAS security is enabled globally, the WAS internal links between the deployment manager and the application servers and traffic from the administrative clients (Web and command line) to the deployment manager are encrypted and authenticated (see Figure 5). Among other things, this means that administrators will be required to authenticate when running the administrative tools.

Enable Single Sign-On

When enabling WAS global security with LTPA, always enable Single Sign-On (SSO). This tells WAS to create LTPA cookies and send them back to the browser. It makes it possible for the application server to remember the user's identity across requests. If you do not enable SSO, WAS will not create a cookie and must reauthenticate the user on every request. In many cases, this will not work. With Form-based login without SSO, every login results in the user being returned to the login page because the redirect to the page after login never succeeds, as WAS has forgotten the user's identity immediately after login. More importantly, it is inefficient. Since every request results in a reauthentication of the user, the performance of the system can be quite poor.

We haven't mentioned SWAM because we prefer not to use it for several reasons:

- It will be deprecated in the future.

- It is not supported on WAS Network Deployment (ND).

- It relies on the HTTP session for security. Since the HTTP session is not designed to be secure, that isn't a great idea.

Be aware that enabling global security does not protect all network links, but rather a number of key internal links. We'll discuss securing additional network links later in this article. We will also discuss securing applications by leveraging WAS security now that it has been enabled.

4. Use HTTPS from the browser

If your site performs any authentication or has any activities that should be protected, use HTTPS from the browser to the Web server. If HTTPS is not used, information such as passwords, user activities, WAS session IDs, and LTPA security tokens can potentially be seen by intruders as the traffic travels over the external network.

While we can transmit LTPA tokens over an unencrypted channel, for maximum protection, it is best that they are sent over an encrypted link. If an LTPA token is successfully captured, the thief can impersonate the user identified until it expires.

5. Configure secure file transfer

Even when security is enabled, the deployment manager continues to communicate configuration updates to the node agents using an unauthenticated protocol. More precisely, node agents pull admin configuration updates from the deployment manager using an unauthenticated file transfer service. Thus, any foreign client can potentially connect to a deployment manager and upload arbitrary files. If numerous large files are uploaded, the operating system could run out of disk space, resulting in a total failure. It is also theoretically possible to download files that are being replicated from the deployment manager to nodes. However, given the brief and transient nature of these files, this is less likely.

To ensure that the deployment manager only responds to file transfer requests from trusted servers in the cell, install the filetransferSecured application and replace the existing insecure application. Because this application is a system application, it is not normally visible, but it is there. You must remove it and install a new one using wsadmin. IBM provides a script for this, but be aware that at the time of writing this article, the instructions in the V6 Information Center are not quite correct (and this is not documented in the V5 or V5.1 Information Centers). To install the filetransferSecured application, follow the steps below (we show a Windows example, but UNIX® is essentially the same).

cd <profilehome>\bin

wsadmin.bat -user <wasadminuser> -password <waspassword>

wsadmin>source ../../../bin/redeployFileTransfer.jacl

wsadmin>fileTransferAuthenticationOn <your cell name> <dmgr node name> dmgr

wsadmin>$AdminConfig save

We are assuming a WAS ND environment. If you are using WAS base, the server name is obviously not dmgr. It is probably server1.

If you can't remember the cell name and node name, you can find them by looking under the config directory for the profile. Remember that the node is the node of the deployment manager, and thus the name likely ends in "CellManager."

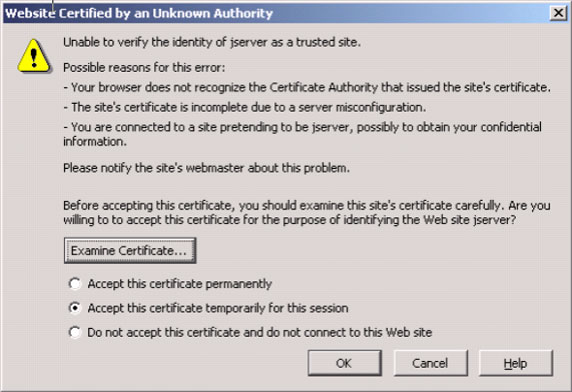

6. Configure certificates to ensure reasonable Web-based administrative certificate validation

When security is enabled and you use the Web administrative console, your Web browser will likely warn you that the certificate is not trusted and that the hostname does not match the hostname in the certificate. You should see messages like those in Figures 6 and 7 (we are using the Mozilla Web browser).

These messages are warnings indicating possible security problems related to the certificates that WAS is using. When you update the default keyring, you can take steps to prevent these warnings. First, generate a certificate where the Subject name is the same as the hostname of the machine running the administrative Web application (the one server in base or the deployment manager in other editions). That will take care of the second browser error message. The first message can be prevented by either buying a certificate from a well-known CA for the WAS administrative console (this is probably overkill), or by accepting the certificate permanently. Keep in mind that if you ever see this message again, that is quite possibly a sign of a security breach. Thus, your system administration staff should be trained to recognize that this warning should only occur when the certificate is updated. If it occurs at other times, it's a red flag warning that the system has been compromised.

7. Protect JNDI

J2EE applications use JNDI to find other applications and resources. WAS therefore makes extensive use of JNDI. Unfortunately, the JNDI namespace is by default insecure. Everyone has read access, while all authenticated users have read/write access. As a result, any client with IIOP access to the cell (the JNDI namespace is accessible via IIOP remotely) can modify the namespace in arbitrary ways if they have a valid user ID and password in the WAS registry. Needless to say, this is quite a risk.

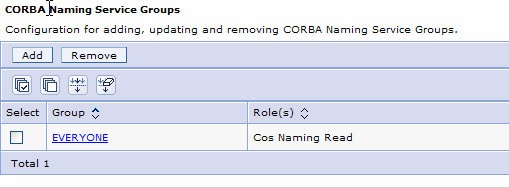

To address this, you should restrict access to the JNDI namespace by specifying something more restrictive administratively using the Environment => Naming => CORBA Naming Service Groups area in the admin console. We recommend you restrict the namespace such that "Everyone" has only read access and no one has read/write access. This is reasonably safe as there is nothing sensitive stored in JNDI (passwords are not stored there). An intruder could potentially determine server endpoints and such, but little else. The key is to prevent malicious updates. Notice that we've removed the default ALL AUTHENTICATED grants.

This setting ensures that attackers cannot modify the JNDI namespace. This does not impact WAS's own operations (including registering resources in JNDI) because it always grants itself the needed permissions. However, applications that write to JNDI will fail. Since this is rare, this should not be an issue in most situations. If some applications need write access to JNDI, you'll have to grant them the needed permissions. Note that JNDI permissions apply to the entire namespace, not just one part.

8. Ensure meaningful authorization on default messaging

By default, the service integration bus (SIB) has no meaningful authorization, even when WAS security is enabled. This means that any party with a valid user ID and password in the WAS registry (which could be anyone in your company) can perform any action against any queue. None of this is visible or configurable via the administration console, so you are going to have to use wsadmin. The messaging bus admin tasks are controlled by the wsadmin $AdminTask command. If you use listGroupsInBusConnectorRole, you will quickly discover that, by default, all authenticated users have access to the bus. That's not very secure!

We recommend that you immediately use the removeGroupFromBusConnectorRole and addUserToBusConnectorRole/addGroupToBusConnectorRole tasks to correct this. For example:

wsadmin>$AdminTask removeGroupFromBusConnectorRole {-bus mySIB -group AllAuthenticated}

wsadmin>$AdminTask addUserToBusConnectorRole {-bus mySIB -user bususeryoudetermine}

Of course, once you configure authorization to limit the parties that can connect, you'll need to ensure that your client components (MDBs, JMS, etc.) authenticate using appropriate identities.

9. Restrict access to WebSphere MQ messaging

If you are using WebSphere MQ as your messaging provider, you'll need to address queue authorization via other techniques. WebSphere MQ, by default, does not perform any user authentication when using client/server mode (bindings mode relies on process to process authentication within a machine). In fact, when you specify a user ID and password on the connection factory for WebSphere MQ, those values are ignored by WebSphere MQ.

One option to address this is to implement your own custom WebSphere MQ authentication plugin on the WebSphere MQ server side to validate the user ID and password sent by WAS. A second, and likely simpler, technique is to configure WebSphere MQ to use SSL with client authentication, and then ensure that only the WAS server possesses acceptable certificates for connecting to WebSphere MQ (leveraging the SSL client authentication trick mentioned earlier).

10. Encrypt WAS to LDAP link

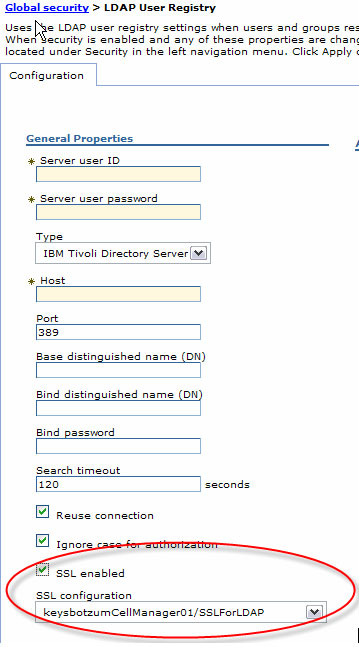

When using an LDAP registry, WAS verifies a user's password using the standard ldap_bind. This requires that WAS send the user's password to the LDAP server. If that request is not encrypted, a hacker could use a network sniffer to steal the passwords of users authenticating (including administrative passwords!). Most LDAP directories support LDAP over SSL, and WAS can be configured to use this. On the LDAP user registry panel (see Figure 9), check the use SSL option and then configure an SSL Configuration appropriate to your LDAP directory. You'll most likely need to place the signing key for the LDAP server's certificate into the trust store. It's best to create a new SSL Configuration just for LDAP to avoid causing problems with the existing SSL usage.

If you use a custom registry, you'll want to secure this traffic using whatever mechanism is available.

11. Change the default key file

When changing the default certificates, there is one important thing to keep in mind. As with any SSL-based system, the client must be able to verify the certificate presented by the server. This requires that the client hold the signing certificate for the signer that signed the certificate presented by the server.

When WAS ships, all of this is taken care of for Java clients, including the IBM provided tools such as wsadmin and Tivoli Performance Viewer (for versions prior to V6), as well as custom-written clients. However, when you update the server key file to use a new private key and certificate signed by some signer, ensure that the Java clients can verify those certificates. You will need to update the client trust file to contain the signer certificate for the party that issued your new server certificates. This means that any Java clients, such as the WAS admin clients, may need new client key files. If you use certificates issued from a well-known CA, such as Verisign, this will not be necessary since the default client key rings include signing certificates from the most common public CAs already. This is not a recommendation to buy certificates from a CA for WAS internal communication. If you have few Java clients, it is usually easier and cheaper to simply generate a self-signed certificate and update the small number of client keyrings manually.

This issue also affects the Web server to application server communication. Because the Web server plug-in supports HTTPS by default, the Web server plug-in keyring contains a copy of the WAS sample signing certificate. If you change the default keyring, do not forget to update the Web server plug-in keyring to include the new certificate.

Enabling WAS security causes most internal traffic to use SSL to protect it from various forms of network attack. However, in order to establish an SSL connection, the server must possess a certificate and corresponding private key. To simplify the initial installation process, WAS is delivered with a sample key file containing a sample private key. This "private" key is included in every copy of WAS sold. As such, it is not very private. The name of the key file, DummyServerKeyFile, makes this clear.

To protect your environment, you should create your own private key and certificate for communication. All of this is done using the ikeyman tool. Using ikeyman, create a new keystore and truststore, and then update the existing SSL configurations to use these new files.

When you create a new keystore and truststore, keep in mind that every signer that you place in the truststore is a statement about trust. You are trusting that signer to identify principals in the system. If you trust multiple signers, there is the very real risk that multiple signers will create certificates for what appear to be the same user depending on how certificates are mapped to user identities. If you are using certificates for client authentication, to reduce this risk, you should reduce the number of signers in the trust store to the minimum number possible.
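The risk of trusting multiple signers can be illustrated with a small model. This is a sketch under stated assumptions: the mapping rule (use the CN value as the user name) is just one hypothetical way certificates might be mapped to identities, and the signer names, certificate contents, and function names are invented for illustration.

```python
def map_to_user(subject):
    """Hypothetical mapping rule: take the CN value as the user name."""
    for part in subject.split(","):
        key, _, value = part.strip().partition("=")
        if key.upper() == "CN":
            return value
    return None


def authenticate(cert, trusted_signers):
    """Return the mapped user if the certificate's signer is trusted, else None."""
    if cert["issuer"] not in trusted_signers:
        return None
    return map_to_user(cert["subject"])


# Two different signers each issue a certificate that maps to "alice".
cert_from_a = {"subject": "CN=alice, O=Example", "issuer": "CN=Signer A"}
cert_from_b = {"subject": "CN=alice, O=Elsewhere", "issuer": "CN=Signer B"}

both_signers = {"CN=Signer A", "CN=Signer B"}
only_signer_a = {"CN=Signer A"}

user_a_both = authenticate(cert_from_a, both_signers)
user_b_both = authenticate(cert_from_b, both_signers)    # same user, different signer
user_b_restricted = authenticate(cert_from_b, only_signer_a)
```

With both signers trusted, either certificate is accepted as the same user. Removing the second signer from the trust store closes that path without touching the mapping rule.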

While we are talking about certificates, we want to digress briefly on a crucial point -- certificates expire. Should the WAS certificates expire, WAS will stop working. No communication will be possible. Thus, when you create new certificates as we recommend, ensure that you mark the expiration date on a calendar. If, against our advice, you use the default keys, remember that they expire as well. You must actively plan for certificate expiration and obtain or generate new certificates prior to their expiration.
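Planning for expiration can be as simple as a scheduled check that compares each certificate's expiration date with the current date. The following is a minimal sketch: the function names, the 30-day warning window, and the dates are assumptions chosen for illustration, and reading the expiration date out of a real key store is left to whatever key management tooling you use.

```python
from datetime import datetime, timedelta, timezone


def days_until_expiry(not_after, now):
    """Whole days remaining before the certificate's expiration date."""
    return (not_after - now).days


def needs_renewal(not_after, now, warning_window=timedelta(days=30)):
    """True once the certificate is inside the warning window or already expired."""
    return not_after - now <= warning_window


# Hypothetical expiration date, as it would be read from the certificate.
not_after = datetime(2007, 6, 30, tzinfo=timezone.utc)

early_check = datetime(2007, 1, 1, tzinfo=timezone.utc)
late_check = datetime(2007, 6, 15, tzinfo=timezone.utc)

early_days = days_until_expiry(not_after, early_check)
late_days = days_until_expiry(not_after, late_check)
renew_early = needs_renewal(not_after, early_check)
renew_late = needs_renewal(not_after, late_check)
```

Choose a warning window long enough to cover the time it takes to obtain or generate a replacement certificate and distribute the matching signer to every client trust store.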

12. Harden the Web server host

If you are following the standard topology recommended earlier, your Web server is running in the DMZ. Since a DMZ is the front line defense against potential intruders, special care must be taken to harden this server.

This article does not discuss the specifics of Web server hardening, but you should look hard at things like operating system hardening, limiting the Web server modules being loaded, and other Web server configuration steps. See Building Secure Servers with LINUX for more information on these topics.

Web server management (V6 only)

When hardening your Web server, there is one WAS specific item that we need to consider. It is possible for the WAS administrative infrastructure to manage Web servers. While this seems like a good thing from an ease of use perspective, this raises serious security issues. There are two ways we can manage a Web server: using a managed node and using the native IBM HTTP Server (IHS) Admin Server.

Using a managed node requires that you place a node agent on the Web server machine (which is typically in the DMZ) and that this agent be part of the WAS cell. This is completely unacceptable from a security perspective, and thus should not be used except in those rare cases where there is no need for a DMZ. This is unacceptable for two reasons:

- A node agent is a fully functional member of the cell and has full administrative authority. If it is compromised in the DMZ, the entire cell is compromised.

- WAS is a large and powerful product, and therefore it is difficult to harden as this article shows. Such products don't belong in the DMZ.

The second approach requires using IBM HTTP Server and configuring the HTTP Admin Server. The deployment manager sends administrative requests to the HTTP Admin Server running on the Web server host. This seems like a reasonable approach, but some may be concerned about even this type of traffic.

13. Don't run samples in production

WAS ships with several excellent examples to demonstrate various parts of the product. These samples are not intended for use in a production environment. Do not run them there, as they create significant security risks. In particular, the showCfg and snoop servlets can provide an outsider with tremendous amounts of information about your system. This is precisely the type of information you do not want to give a potential intruder. This is easily addressed by not running server1 (that contains the samples) in production. If you are using WAS base, you'll actually want to remove the examples from server1.

14. Review Web server trust boundaries

When configuring your environment, always be careful to consider trust boundaries. It is unfortunately far too easy to accidentally create a weakly secured environment by inappropriately extending the trust boundary. Normally, WAS performs its own authentication using strong mechanisms. But, it is possible for WAS to trust other components in your infrastructure to perform the authentication. If done properly, there is no problem. However, if done improperly or without careful consideration, we can create an insecure system, or at least one with poorly understood trust relationships. Remember the old adage -- security is only as strong as its weakest link! Carefully limit the WAS trust domain.

Extension of the WAS trust domain typically occurs explicitly when writing TAIs, login modules, or other custom code, but it can also occur implicitly, for example, when using certificates.

When using client certificate authentication for Web clients, realize that the Web server is now part of your trust domain. The WAS plugin forwards information about the user's certificate to the application server. The application server must trust that the Web server has done proper certificate authentication. WAS can't repeat the SSL authentication handshake (only the SSL terminator can do that). Therefore, compromise of the Web server will compromise WAS security completely.

Further, when you are using client certificate authentication to the Web server, since WAS now completely trusts the Web server, you should configure authenticated HTTPS between the Web server and the application server to avoid attacks that spoof the certificate information. This brings us to the next topic.

15. Consider authenticating Web server to WAS HTTP link

The WAS Web server plug-in forwards requests from the Web server to the target application server. By default, if the traffic to the Web server is over HTTPS, then the plug-in will automatically use HTTPS when forwarding the request to an application server, thus protecting its confidentiality.

Further, with some care, you can configure the application servers (which contain a small embedded HTTP listener) to only accept requests from known Web servers. This prevents various sneak attacks that bypass any security in front of or in the Web server, and creates a trusted network path. This type of situation might seem unlikely, but it is possible. Some examples:

- You have an authenticating proxy server that just sends the user ID as an HTTP header without any authentication information. An intruder that can access the Web container directly can become anyone simply by providing this same header. Tivoli Access Manager WebSEAL does not have this weakness.

- You are using client certificate authentication to the Web server. When this occurs, the WAS plugin forwards the certificate information from the Web server to application server and trusts it. Thus, direct access to the Web container by an intruder will compromise WAS security completely when client certificates are being used because the intruder can assert any valid certificate and become anyone.

To create a trusted network path from the Web server to the application server, you configure the application server Web container SSL configuration to use client authentication. Once you have ensured that client authentication is in use, we need to ensure that only trusted Web servers can contact the Web container. To do this, limit the parties that have access by applying the SSL trick. Specifically in this case, you'll need to:

- Create a key store and trust store for the Web container and a key store for the Web Server plug-in using ikeyman.

- Delete all of the existing signing certificates from every key store, including the trust store. At this point, we cannot use any key store to validate any certificates. That's intentional.

- Create a self-signed certificate in the two key stores and export just the certificate (not the private key).

- Import into the plug-in key store the certificate exported from the Web container key store. Import into the Web container trust store the plug-in certificate. Now each side contains only a single signing certificate. This means we can verify exactly one certificate -- the self-signed certificate created for the peer.

- Install the newly created key stores into the Web container and Web server plug-in.

16. Protect private keys

WAS maintains several sets of private keys. The two most important examples include the primary keystore for internal communication, and the keystore used for communication between the Web server and the application server. These private keys should be kept private and not shared. Since they are stored on computer file systems, those file systems must be carefully protected. Given the sensitive nature of the information in the keystore, even placing it on a shared network accessible file system is inadvisable.

Also be careful to avoid incidental sharing of these keys. For example, do not use the same keys in production as in other environments. Many people will have access to development and test machines and their private keys. Guard the production keys carefully.

17. Configure and use trust association interceptors carefully

TAIs are often used to allow WAS to recognize existing authentication information from a Web SSO proxy server, such as Tivoli Access Manager WebSEAL. Generally, this is fine. However, be careful when developing, selecting, and configuring TAIs. A TAI extends the trust domain. WAS is now trusting the TAI and whatever the TAI trusts. If the TAI is improperly developed or configured, it is possible to completely compromise the security of WAS. If you custom-develop a TAI, ensure that the TAI carefully validates the parameters passed in the request and that the validation is done in a secure way. We've seen TAIs that perform foolish things such as simply accepting a username in an HTTP header. That's useless unless care is taken to ensure two things: that all traffic received at WAS is sent via the authentication proxy (for example, by using the techniques described earlier), and that the authentication proxy always overrides any such HTTP header set by the client, since HTTP headers can be forged.

WebSEAL TAI configuration

TAI version note

Mutual SSL authentication is no longer supported by the newer WebSEAL TAI included with WAS V5.1.1 and later, and known by the class name TAMTrustAssociationInterceptorPlus. We can still configure mutual SSL in WAS (and doing so is often useful), but the TAI won't honor it. You must use password-based authentication from WebSEAL with the newer TAI. The older TAI (WebSealTrustAssociationInterceptor) continues to be included if you wish to use it.

The trust relationship between WAS and WebSEAL is crucial because the WebSEAL TAI within WAS is accepting identity assertions from WebSEAL. If that link is compromised, an intruder could then assert any identity and completely undermine the security of the infrastructure. The trust relationship between WAS and WebSEAL is established through one of two mechanisms:

- mutual SSL authentication

- password based authentication

Either mechanism is appropriate within a secure environment. However, each must be configured properly or serious security breaches are possible. In either case, WebSEAL sends the end user's user ID as an iv-user header on the HTTP request. The difference between the two configurations is how WebSEAL proves itself to the application server.

WebSEAL password configuration

When password authentication is used, WebSEAL sends its user ID and password as the basic auth header in the HTTP request (the user's user ID is in the iv-user header). Password based authentication is configured in two places. First, TAM WebSEAL must be configured to send its user ID and password to the application servers for the junction being configured. This password is, of course, a secret that must be carefully protected. The WebSEAL TAI validates this password against the registry when it is received.

If the LoginId property is not set on the WebSEAL properties, then the TAI will verify the user ID and password combination sent from WebSEAL and trust it if it is any valid user ID and password combination. This is not a secure configuration because this implies that any person knowing any valid user ID and password combination can connect to WAS and assert any user's identity. When you specify the LoginId property, the WebSEAL TAI ignores the inbound user ID in the basic auth header and verifies the LoginId and WebSEAL password combination. In that case, there is then only one (presumably closely guarded secret) valid password sent from WebSEAL. You should, of course, configure SSL from WebSEAL to the application server to ensure that the secret password is not sent in cleartext.
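
The effect of the LoginId property can be illustrated with a toy model. This Python sketch is not the TAI's actual code: the function name, the dictionary standing in for the user registry, and the sample credentials are all assumptions made for illustration.

```python
def tai_trusts_request(basic_user, basic_password, registry, login_id=None):
    """Toy model of the trust decision described above (not the real TAI).

    registry: dict of user ID -> password, a stand-in for the user registry.
    login_id: value of the LoginId property, or None when it is not set.
    """
    # With LoginId set, the inbound user ID is ignored and only the
    # LoginId/password pair is verified.
    user_to_check = login_id if login_id is not None else basic_user
    return user_to_check in registry and registry[user_to_check] == basic_password

if __name__ == "__main__":
    registry = {"webseal": "s3cret", "alice": "alicepw"}  # hypothetical accounts
    # LoginId unset: any valid pair is trusted, so alice could pose as WebSEAL.
    print(tai_trusts_request("alice", "alicepw", registry))
    # LoginId set: only the WebSEAL password is accepted.
    print(tai_trusts_request("alice", "alicepw", registry, login_id="webseal"))
```

In the first call an ordinary user's credentials are enough to be treated as the proxy; in the second, only the single WebSEAL secret works.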

WebSEAL mutualSSL configuration

Mutual SSL is configured through three separate and critically important steps:

- You must configure WebSEAL to use SSL to communicate with WAS and that SSL configuration must include a client certificate known only to the application server Web container.

- You must configure the application server Web container to perform client certificate authentication. You also must alter its trust store to include only the client certificate that WebSEAL is using. This step is crucial because this is how we guarantee that requests to the application server Web container are coming only from WebSEAL and not some intruder (using mutually authenticated SSL is not sufficient). You must also remove from the Web container the non-HTTPS transports to ensure that only mutually authenticated SSL is used when contacting the server.

- You must configure the WebSEAL TAI with mutualSSL=true in its properties. However, understand that this last step merely states to the TAI that it should assume the connection is secure and that it is using mutually authenticated SSL. If either of the two previous steps is not configured exactly correctly, your environment is now completely insecure. Thus, the choice to use mutualSSL must be taken with great care. Any configuration mistakes will result in an environment where any user can be impersonated.

If you add a Web server to the mix, things get even more complicated. In this case, carefully configure a mutual SSL configuration between WebSEAL and the Web server, and a second between the Web server plugin and the WAS Web container.

18. Use only the new LTPA cookie format

WAS V5.1.1 introduced a new LTPA cookie format (LTPAToken2) to support subject propagation (see Advanced Authentication in WAS). While doing this, some theoretical weaknesses in the old format were also addressed. Bear in mind that these weaknesses are theoretical. No known compromises have occurred. It is desirable to use the new stronger format, unless you have to use the older format.

The new LTPA token uses the following strong cryptographic techniques:

- Random salt

- Strong AES based ciphers

- Data is signed

- Data is encrypted

For the curious, a 1024 bit RSA key pair is used for signing and a 128 bit secret key (AES) is used for encryption. The cipher used for encryption is AES/CBC/PKCS5Padding.

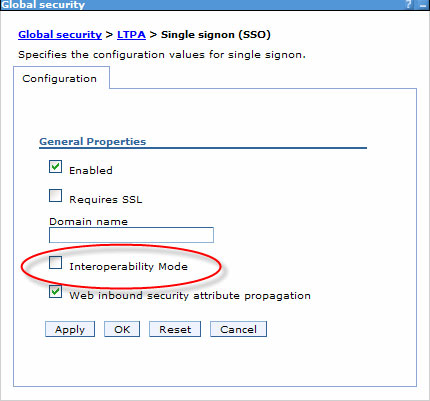

Both the old and new cookie format are supported simultaneously by default to ensure compatibility with other systems, such as older versions of WAS, Lotus® Domino®, and TAM WebSEAL, when it creates LTPA cookies. If you don't need this compatibility, you should disable it. To do so, go to the Security => Authentication Mechanisms => LTPA => SSO configuration panel and unselect interoperability mode.

19. Don't specify passwords on the command line

Once security is enabled, the WAS administrative tools require that you authenticate in order to function. The default or obvious way to do this is to specify the user ID and password on the command line as parameters to the tools. Do not do this. This exposes your administrative password to anyone looking over your shoulder. And, on many operating systems, anyone that can see a list of processes can see the arguments on the command line. Instead, ensure that the administrative tools prompt for a user ID and password. As of WAS V6.0.2, all of the administrative tools prompt automatically for a user ID and password if one isn't provided on the command line. No further action is required.

If you are using an older version of WAS, you can force the tools to prompt by telling them to use RMI (the default is SOAP) communication. The RMI engine prompts when needed. To do this, simply specify -conntype RMI -port <bootstrap port>. Here's an example of starting wsadmin this way to connect to a deployment manager listening on the default port:

wsadmin.sh -conntype RMI -port 9809

You may find it annoying that the command line tools prompt graphically for a user ID and password. Fortunately, you can override this behavior and force the tools to use a simple text based prompt. To do this, change the loginSource from prompt to stdin by editing the appropriate configuration file. By default, the administrative tools use SOAP, and thus you need to edit the soap.client.props file. If you are using RMI, edit sas.client.props. Look for the loginSource property in the appropriate file and change it to specify stdin.
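
The loginSource change can also be scripted when many installations need it. The Python sketch below is only an illustration: the function name is hypothetical, it matches any key ending in loginSource rather than assuming the full property name, and it handles only key=value lines.

```python
def set_login_source(props_text, value="stdin"):
    """Return the properties text with every *loginSource key set to value.

    Comment lines and unrelated properties are passed through unchanged.
    Only the key=value form is handled in this sketch.
    """
    out = []
    for line in props_text.splitlines():
        stripped = line.strip()
        if not stripped.startswith("#") and "=" in stripped:
            key = stripped.partition("=")[0].strip()
            if key.endswith("loginSource"):
                line = "%s=%s" % (key, value)
        out.append(line)
    return "\n".join(out) + "\n"

if __name__ == "__main__":
    # The key name below is assumed for illustration; check your own file.
    sample = "# client settings\ncom.ibm.SOAP.loginSource=prompt\n"
    print(set_login_source(sample), end="")
```

Keep a copy of the original file before overwriting it, since the client tools read it on every invocation.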

20. Carefully limit trusted signers

When using certificate authentication (client or server), we need to understand that each signer in the trust store represents a trusted provider of identity information (a certificate). You should trust as few signers as possible. Otherwise, it is possible that two signers might issue certificates that map to the same user identity. That would create a serious security hole in your architecture.

You should review the trust stores on the clients and servers using ikeyman and remove any signers which are not needed.

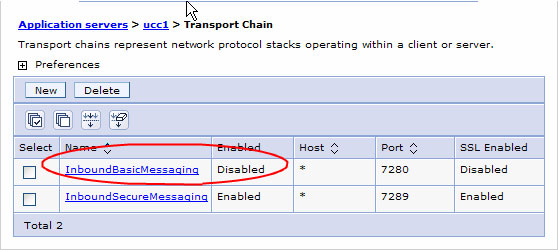

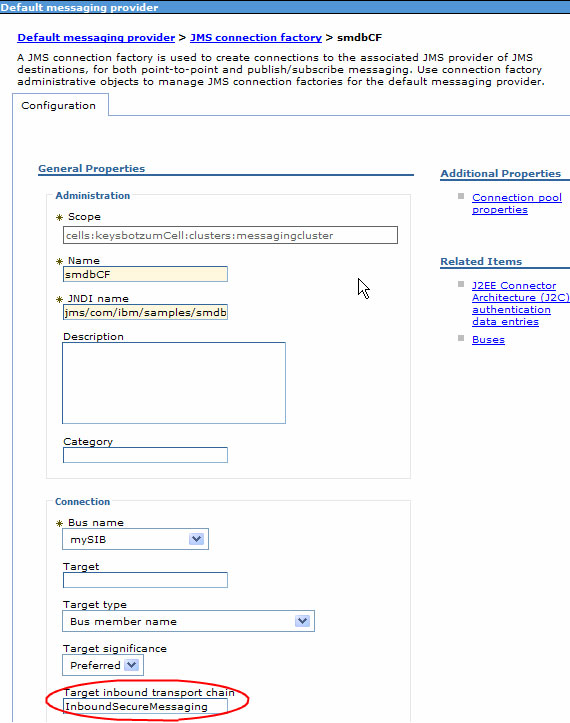

21. Encrypt default messaging links

By default, messaging occurs over unencrypted links. This is obviously undesirable. To correct this, you'll need to make some configuration changes. For each application server (not cluster), go to the messaging engine inbound transport panel and disable the InboundBasicMessaging transport. Once you've done this, clients will only be able to use the InboundSecureMessaging transport.

Version note

The ability to specify the transport chain for MDBs was added with WAS V6.0.2.

To complete the task, configure every client to use the InboundSecureMessaging transport. This requires specifying this transport in one of several places depending on the client type. Ordinary JMS clients (even server side) will need to specify the InboundSecureMessaging transport on the connection factory panel.

MDBs need InboundSecureMessaging specified on the activation specification panel. Finally, if cross bus communication is being used, you need to specify InboundSecureMessaging as the inter-engine transport chain on the bus configuration panel.

See Administering messaging security for more information.

22. Encrypt WebSphere MQ messaging links

If you are using MQ rather than the default messaging provider, you should of course use SSL to MQ. This is done by specifying the SSLCIPHERSUITE property.

23. Encrypt Distribution and Consistency Services transport link

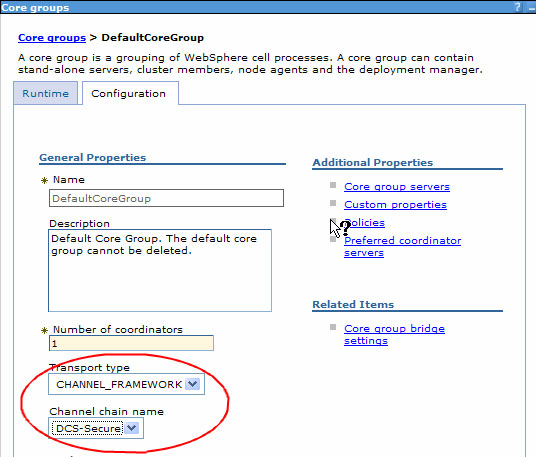

Core groups rely on Distribution and Consistency Services (DCS), which uses a reliable multicast message (RMM) system for transport. RMM can use one of several wire transport technologies. To maximize security, use encrypted links. To ensure this for each core group, select a transport type of channel framework and DCS-Secure as channel chain.

Note that DCS always authenticates messages when global security is enabled. Once the transport is encrypted, you have a highly secure channel.

Once you have done this, all services that rely on DCS are now using an encrypted and authenticated transport. Those services are DynaCache, memory to memory session replication, core groups, Web services caching, and stateful session bean persistence.

24. Encrypt Distributed Replication Service network links

If you are using WAS V6 and have configured DCS to use an encrypted link, this step is unnecessary since Distributed Replication Service (DRS) runs over the DCS link.

DRS traffic is not encrypted by default even when global security is enabled. DRS encryption is configured by specifying DES or 3DES as the encryption type on the replication domain configuration panel (environment => replication domains => yourdomain).

25. Protect application server to database link

Just as with any other network link, confidential information may be written to or read from the database. Although most databases support some form of authentication, not all support encrypting JDBC traffic (see your database vendor's documentation) between the client (WAS applications, in this case) and the database. Thus, recognize this weakness and take appropriate steps. Some form of network-level encryption, such as a Virtual Private Network (VPN), perhaps using IP Security Protocol (IPSEC), is the most obvious solution, although there are other reasonable choices. If you can place your database near WAS (in the network sense), various forms of firewalls and simple routing tricks can greatly limit the access to the network traffic going to the database. The key here is to identify this risk and then address it as appropriate.

26. Create separate administrative user IDs

When WAS security is configured, a single security ID is initially configured as the Security Server ID. This ID is effectively the equivalent of root in WAS and can perform any administrative operation. Because of the importance of this ID, it is best not to widely share the password.

Console login note

As of WAS V6, you'll find that you cannot log in to the admin console as an administrator (other than the security server ID) once you've configured JNDI hardening, as suggested earlier. You'll need to specifically grant all of the CosNaming permissions to your administrators so they can log in.

As with most systems, WAS does allow multiple principals to act as administrators. Simply use the administrative application and go to the System Administration/Console Users (or Groups) section to specify additional users or groups that should be granted administrative authority. When you do this, each individual person can authenticate as himself or herself when administering WAS. Note that all administrators have the same authority throughout the cell. WAS does not support instance-based administrative authorization.

All administrative actions that result in changes to the configuration of WAS are audited by the deployment manager, including the identity of the principal that made the change. These audit records are more useful if each administrator has a separate identity. Audit records are treated as serious messages and sent, by default, to SystemOut.log from the deployment manager.

The approach of giving individual administrators their own separate administrative access is handy in an environment where central administrators administer multiple WAS cells. We can configure all of these cells to share a common registry, and thus the administrators can use the same ID and password to administer each cell, while each cell has its own local "root" ID and password.

27. Take advantage of administrative roles

WAS allows for four administrative roles: administrator, operator, monitor, and configurator. These roles make it possible to give individuals (and automated systems) access appropriate to their level of need. We strongly recommend taking advantage of roles whenever possible. By using the less powerful roles of monitor and operator, we can restrict the actions an administrator can take. For example, we can give the less senior administrators the ability to start and stop servers (operator), and the night operators the ability to watch the system (monitor). These actions greatly limit the risk of damage by trusting people with only the permissions they need.

One interesting role is the monitor role. By giving a user or system this access level, you are giving only the ability to monitor the system state. The holder cannot change the state or alter the configuration. For example, if you develop monitoring scripts that check for system health and have to store the user ID and password locally with the script, use an ID with the monitor role. Even if the ID is compromised, little serious harm can result.

28. Remove JDKs left by Web server and plugin installers

When you install IBM's HTTP Server, the installer leaves behind a JDK in the _jvm directory of the installation root. As this is a full JDK (which includes development tools) this should not be on a machine in the DMZ. You should remove this JDK. Keep in mind that this will make it impossible to run some tools, such as ikeyman, on this Web server. We don't consider that a significant issue because running such tools in the DMZ is problematic.

When you install the WAS HTTP Server plugin using the IBM installer, it also leaves behind JDKs -- two in this case. The first JDK is used by the uninstaller and is in the _uninstPlugin directory under the plugin installation root. The second JDK is in the java directory and is used by ikeyman and other Java tools. As before, you should remove this during post-installation.

If you do choose to remove the JDKs, make a backup copy just in case for future use. One technique might be to zip or tar the JDK and replace it later, such as when it would be needed for the WAS update installer when applying fixes, and then zip/tar and remove it again when the process is complete.
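
The archive-then-remove technique might be scripted along the following lines. This is a minimal Python sketch under stated assumptions: the function names and the archive location are hypothetical, and the directory to archive must be supplied by you.

```python
import shutil
import tarfile
from pathlib import Path

def archive_and_remove(jdk_dir, archive_path):
    """Tar and gzip jdk_dir, confirm the archive has entries, then delete jdk_dir."""
    jdk_dir = Path(jdk_dir)
    archive_path = Path(archive_path)
    with tarfile.open(str(archive_path), "w:gz") as tar:
        tar.add(str(jdk_dir), arcname=jdk_dir.name)
    # Re-open the archive before deleting anything, as a basic sanity check.
    with tarfile.open(str(archive_path), "r:gz") as tar:
        if not tar.getnames():
            raise RuntimeError("empty archive; leaving %s in place" % jdk_dir)
    shutil.rmtree(str(jdk_dir))
    return archive_path

def restore(archive_path, parent_dir):
    """Unpack a previously archived JDK back under parent_dir."""
    with tarfile.open(str(archive_path), "r:gz") as tar:
        tar.extractall(str(parent_dir))
```

Calling restore before applying fixes and archive_and_remove again afterwards mirrors the zip/tar cycle described above.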

29. Choose appropriate process identity

The WAS processes run on an operating system and must therefore run under some operating system identity. There are three ways to run WAS with respect to operating system identities:

- Run everything as root.

- Run everything as a single user identity, such as "was".

- Run the node agents as root and individual application servers under their own identities.

IBM tests for and fully supports the first two approaches. The third approach may seem tempting because we can then leverage operating system permissions, but it isn't very effective in practice for the following reasons:

- It is difficult to configure and there are no documented procedures. Many WAS processes need read access to numerous files and write access to the log and transaction directories.

- By running the node agent as root, you effectively give the WAS administrator and any applications running in WAS root authority.

- The primary value of this approach is to control file system access by applications. We can achieve this using Java 2 permissions.

- This approach creates the false impression that applications are isolated from each other. They are not. The WAS internal security model is based on J2EE and Java 2 security and is unaffected by operating system permissions. Thus, if you choose this approach to protect yourself from "rogue" applications, your approach is misguided.

The first approach is obviously undesirable because, as a general best practice, it is best to avoid running any process as root if it can be avoided. This leaves the second approach, which is fully supported and provides application isolation (should that be desirable) when used in conjunction with Java 2 security. Therefore, we recommend that approach.

Once you have chosen a WAS process identity, you should limit file system access to WAS's files by leveraging operating system file permissions. WAS, like any complex system, uses and maintains a great deal of sensitive information. In general, no one should have read or write access to most of the WAS information. In particular, the WAS configuration files (<root>/config) contain configuration information as well as passwords.

Do not take this too far. We've seen far too many cases where during development, developers aren't allowed to even see the application server log files. Such paranoia is unwarranted. During development, maximal security is not productive. During production, you should lock down WAS as much as possible. During development, be more lenient.
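
To see how the file permissions on a production installation currently stand, a small audit script can list anything accessible beyond the owning account. The Python sketch below assumes a POSIX file system and a policy in which only the WAS process identity needs access. The function name is hypothetical, and group access for administrators may be perfectly legitimate in your environment.

```python
import os
import stat

def find_loose_permissions(root):
    """Return paths under root that grant any access to group or others."""
    loose = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode
            if stat.S_ISLNK(mode):
                continue  # link permissions are not meaningful; skip them
            if mode & (stat.S_IRWXG | stat.S_IRWXO):
                loose.append(path)
    return sorted(loose)

if __name__ == "__main__":
    import sys
    # Pass the directory to audit, for example the WAS config directory.
    for path in find_loose_permissions(sys.argv[1]):
        print(path)
```

The output is only a list for review; deciding which entries to tighten remains a judgment call between production lockdown and development convenience.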

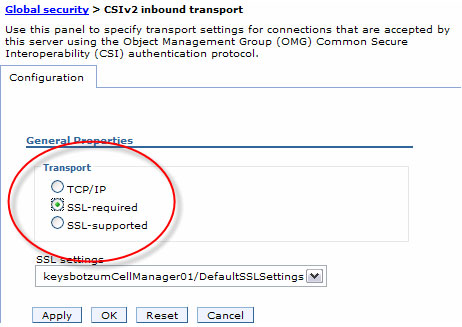

30. Enforce CSIv2 transport SSL use

When WAS servers and clients communicate using CSIv2 IIOP, they negotiate the transport security. Whatever is acceptable to both parties is chosen. Generally, that is fine, but you should be aware of one potential weakness. WAS supports CSIv2 over SSL or cleartext. By default, both parties will typically negotiate to use SSL, thus ensuring an encrypted communication channel. However, if either party in the negotiation requests cleartext, then cleartext will be used. You may not even realize your traffic is being sent in the clear! This might happen, for example, if a client was misconfigured. If you want to guarantee that traffic is encrypted (and you should), it is safer to ensure that SSL is always used.

We can ensure that SSL is used for IIOP by indicating that it is required, and not optional on the CSIv2 transport panel (Security => Authentication Protocol => CSIv2 Inbound Transport). You should do the same for the CSIv2 Outbound Transport.
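
The negotiation behavior described above can be summarized with a toy model. This Python sketch is not WAS code: the three setting labels and the function name are assumptions chosen to mirror the prose, and a None result stands for a refused connection.

```python
SSL_REQUIRED = "ssl-required"    # assumed label: this party insists on SSL
SSL_SUPPORTED = "ssl-supported"  # assumed label: SSL preferred, cleartext allowed
CLEARTEXT_ONLY = "cleartext"     # assumed label: this party requests cleartext

def negotiate(server, client):
    """Return 'ssl', 'cleartext', or None (no connection) for two settings."""
    settings = {server, client}
    if SSL_REQUIRED in settings and CLEARTEXT_ONLY in settings:
        return None  # one side insists on SSL and the other will not use it
    if CLEARTEXT_ONLY in settings:
        return "cleartext"  # either party asking for cleartext wins
    return "ssl"

if __name__ == "__main__":
    print(negotiate(SSL_SUPPORTED, CLEARTEXT_ONLY))  # silent downgrade
    print(negotiate(SSL_REQUIRED, CLEARTEXT_ONLY))   # refused instead
```

The point of the model is the second case: with SSL required, a misconfigured client fails visibly rather than quietly sending traffic in the clear.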

31. Keep up to date with patches and fixes

As with any complex product, IBM occasionally finds and fixes security bugs in WAS, IBM HTTP Server, and other products. It is crucial that you keep up to date on these fixes. It is advisable that you subscribe to support bulletins for the products you use, and in the case of WAS, monitor the recommended updates support page. These bulletins often contain notices for recently discovered security bugs and fixes. We can be certain that potential intruders learn of those security holes quickly. The sooner you act, the better.

Be aware that WAS security fixes are usually rolled into the next cumulative fix for every supported release and then will no longer be listed on the recommended updates page. Therefore you will need to keep up with cumulative fixes if you want all known security holes to be closed.

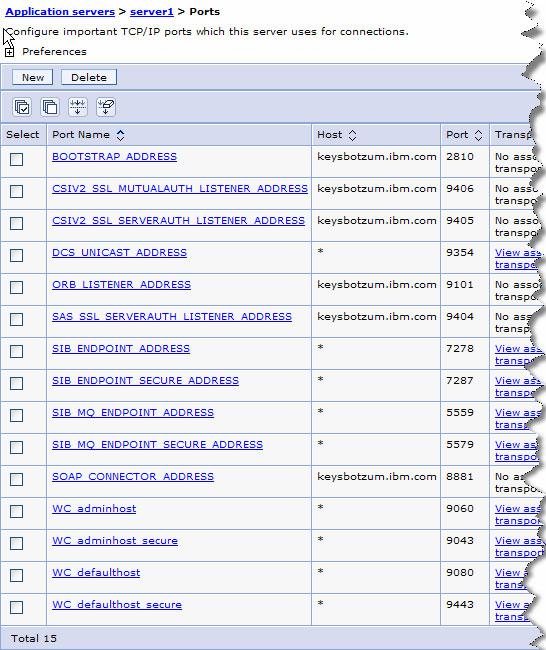

32. Disable unused ports

A basic principle of security hardening is to minimize the attack surface for potential attacks. This is even true when there are no known security issues with a given service. If the service is not required for the site to correctly function, it should be removed to minimize the likelihood of an attacker taking advantage of this additional function at some point in the future. Examine Figure 15 and you'll see that a typical WAS application server is listening on a lot of ports.

If a given service is not required, then we can disable its listening ports. Looking at this list, potential candidates for disabling are:

- SAS_SSL_SERVERAUTH_LISTENER_ADDRESS -- used for compatibility with WAS V4 and earlier releases. This is the old IIOP security protocol. CSIv2 replaces it as of V5.

- SIB_ENDPOINT_* -- used by the built in messaging engine. If you aren't using messaging, you don't need it either.

- SIB_MQ_* -- used by the messaging engine when connecting with WebSphere MQ.

- WC_adminhost* -- used for administrative Web browser access. These are already disabled in an application server that is part of a cell.

- WC_defaulthost* -- the default Web container listening ports. If you've added custom listener ports, these might not be needed.

Different ports require different techniques for disabling them, depending on how they are implemented:

- The SAS_SSL_SERVERAUTH_LISTENER_ADDRESS can be taken out of service by selecting CSI as the active protocol on the global security panel.

- The WC_* ports are all for the Web container. They can best be removed, modified, or disabled from the Web container transport chain configuration panel (application servers => servername => web container => transport chain). The only listening Web ports you need are those used by your applications.

- The SIB_* ports are not started unless the messaging engine is enabled, so no action is necessary for them.
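
After disabling ports, it is worth confirming from another machine that they really are closed. The following Python sketch is one way to do that, using a plain TCP connect. The function name is hypothetical, and the host and port numbers must come from your own server's port list.

```python
import socket

def listening_ports(host, ports, timeout=0.5):
    """Return the subset of ports on host that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports

if __name__ == "__main__":
    import sys
    # Usage: script.py <host> <port> [<port> ...]
    host = sys.argv[1]
    print(listening_ports(host, [int(p) for p in sys.argv[2:]]))
```

A port that still appears in the result after you believed it was disabled points to a transport chain or protocol setting that was missed.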

Application-based preventative measures: configuration

At this point, we have focused on the basic steps that a WAS architecture and the administration team can take to ensure that they create a secure infrastructure. That's obviously an important step, but it is not sufficient. Now that the infrastructure has been hardened, we must examine things that applications need to do to be secure. Applications will need to take advantage of the infrastructure provided by WAS, but there are also numerous other actions that application developers need to take. We'll detail many of those issues here.

33. Never set Web server doc root to WAR

WAR files contain application code and lots of sensitive information. Only some of that information is Web-servable content. Thus, it is inappropriate to set the Web server document root to the WAR root. If you do this, the Web server will serve up all the content of the WAR without interpretation. This will result in code, raw JSPs, and more being served up to end users.

Note that this recommendation only applies when a Web server is co-located with an Application Server -- a configuration that we do not recommend.

34. Carefully verify that every servlet alias is secure

WAS secures servlets by URL. Each URL that is to be secured must be specified in the web.xml file describing the application. If a servlet has more than one alias (that is, multiple URLs access the same servlet class), or there are many servlets, it is easy to accidentally forget to secure an alias. Be cautious. Since WAS secures URLs, not the underlying classes, if just one servlet URL is insecure, an intruder might be able to bypass your security. To prevent this, whenever possible, use wildcards to secure servlets. If that is not appropriate, carefully double-check your web.xml file before deployment.
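To see why a forgotten alias slips through while a wildcard catches it, the following Java sketch mimics a simplified form of servlet URL-pattern matching (exact path, "/prefix/*", and "*.ext" only). It is an illustration, not WAS code: the class name UrlPatternDemo, its methods, and the example paths are all hypothetical, and a wildcard only helps when the aliases share a common prefix.

```java
import java.util.List;

// Hypothetical helper that approximates how a secured URL pattern is matched
// against a request path. It covers only three simplified pattern forms.
final class UrlPatternDemo {
    private UrlPatternDemo() {}

    static boolean matches(String pattern, String path) {
        if (pattern.endsWith("/*")) {
            // Path-prefix pattern: "/admin/*" covers "/admin" and anything below it.
            String prefix = pattern.substring(0, pattern.length() - 2);
            return path.equals(prefix) || path.startsWith(prefix + "/");
        }
        if (pattern.startsWith("*.")) {
            // Extension pattern: "*.do" covers any path ending in ".do".
            return path.endsWith(pattern.substring(1));
        }
        // Otherwise only an exact match counts.
        return pattern.equals(path);
    }

    // A path is protected if at least one secured pattern matches it.
    static boolean isProtected(List<String> securedPatterns, String path) {
        for (String pattern : securedPatterns) {
            if (matches(pattern, path)) {
                return true;
            }
        }
        return false;
    }
}
```

With only the exact pattern "/admin/report" secured, a second alias such as "/admin/reportAlias" is not covered; securing "/admin/*" covers both.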

The alias problem is further aggravated by the feature known as serve servlets by classname, which brings us to our next recommendation.

35. Don't serve servlets by classname

We can serve servlets by classname or via a normal URL alias. Normally, applications choose the latter. That is, developers define a precise mapping from each URL to each servlet class in the web.xml file by hand, or by using one of the various WAS development tools.

However, WAS also lets you serve servlets by classname. Instead of defining a mapping for each servlet, a single generic URL (such as /servlet) serves all servlets. The component of the path after the base is assumed to be the classname for the servlet. For example, "/servlet/com.ibm.sample.MyServlet" refers to the servlet class "com.ibm.sample.MyServlet".

Serving servlets by classname is accomplished by setting the serveServletsByClassnameEnabled property to true in the ibm-web-ext.xmi file, or by checking serve servlets by classname in the WAR editor of IBM Rational® Application Developer. Do not enable this feature. This feature makes it possible for anyone who knows the classname of any servlet to invoke it directly. Even if your servlet URLs are secured, an attacker may be able to bypass the normal URL-based security. Further, depending on the classloader structure, an attacker may be able to invoke servlets outside of your Web application.

35. Do not place sensitive information in WAR root

WAR files contain servable content. The Web container serves HTML and JSP files found in the root of the WAR file. This is fine as long as you place only servable content in the root. Thus, never place content that shouldn't be shown to end users in the root of the WAR. For example, don't put property files, class files, or other important information there. If you must place such information in the WAR, place it within the WEB-INF directory, as allowed in the servlet specification. Information there is never served by the Web container.

36. Define a default error handler

When errors occur in a Web application or even before the application dispatch (if for example WAS can't find the target servlet), an error message is displayed to the user. By default, WAS will display a raw exception stack dump of the error. Not only is this incredibly unfriendly to the end user, it also reveals information about the application. Names of classes and methods are in the stack information. The exception message is also displayed; this message might contain sensitive information.

It is best to ensure that end users never see a raw error message. While you could try to catch every exception in every servlet, this doesn't cover every case (as noted above, errors can occur before the application is dispatched). Instead, you should define a default error page. The default error page will be displayed whenever an unhandled exception occurs. This page can be a friendly error message rather than a stack trace. The default error page is defined in ibm-web-ext.xmi using the defaultErrorPage attribute. It can also be set in Rational Application Developer using the Web Deployment Descriptor editor (extensions tab).

37. Consider disabling file serving and directory browsing

We can further limit the risk of inappropriate serving of content by disabling file serving and directory browsing in your Web applications. Note, however, that if the WAR contains servable static content, file serving must remain enabled.

38. Enable session security

WAS does not normally enforce any authorization of HTTP Session access. Any party with a valid session identifier can access any session. While session identifiers aren't likely to be guessable, it may be possible to obtain session identifiers through other means.

To reduce the risk of this form of attack, you should consider enabling session security. This setting is configured on the application server => <server name> => web container => session management panel. Check the box labeled security integration. Once this is done, WAS will track which user (as determined by the LTPA credential that they present) owns which session, and will ensure that only that user can access that session.

In rare cases, this setting can break Web applications. If the application contains a mixture of secure (those with authorization constraints) and insecure servlets, the insecure servlets may be unable to access the session object. Once a secure servlet accesses the session, the session is marked as "owned" by that user. Insecure servlets, which run as anonymous, will receive an authorization failure if they then try to access that session.

39. Beware of custom JMX network access

JMX and custom MBeans make it possible to support powerful remote customized administration of your applications. Be aware that JMX MBeans are network accessible. If you choose to deploy them, do so carefully and ensure that they have proper authorization on their operations. WAS will automatically provide default authorization restrictions for MBeans based on information in the descriptor in the MBean JAR file. Whether this is appropriate depends on your application. See IBM WebSphere: Advanced Deployment and Administration for more information.

Application-based preventative measures: design and implementation

Now we turn our attention to the actions that application developers and designers must take to build a secure application. These steps are crucial and, sadly, often overlooked.

40. Use WAS security to secure applications

Usually, application teams recognize that they need some amount of security in their application. This is often a business requirement. Unfortunately, many teams develop their own security infrastructure. While this is possible to do well, it is very difficult, and most teams do not succeed. Instead, there is the illusion of strong security, but in fact the system security is quite weak. Security is simply a hard problem. There are subtle issues of cryptography, replay attacks, and various other forms of attack that are easily overlooked. The message here is that you should use WAS security unless it truly does not meet your needs. This is rarely the case.

Perhaps the most common complaint about the J2EE-defined declarative security model is that it is not sufficiently granular. For example, we can only perform authorization at the method level of an EJB or servlet, not at the instance level. In this context, a method on a servlet is one of the HTTP methods: GET, POST, PUT, and so on. Thus, for example, all bank accounts have the same security restrictions, but you would prefer that certain users have special permissions on their own accounts.

This problem is addressed by the J2EE security APIs (isCallerInRole and getCallerPrincipal for EJBs; isUserInRole and getUserPrincipal for servlets). By using these APIs, applications can develop their own powerful and flexible authorization rules, but still drive those rules from information that is known to be accurate -- the security attributes from the WAS runtime.
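As a minimal sketch of such an instance-level rule, consider the bank account case. So that the example runs outside a container, the CallerContext interface below is a hypothetical stand-in for the two calls that a real EJB would make on javax.ejb.EJBContext. The Account, AccountService, and FixedCaller classes and the "Teller" and "Customer" role names are also assumptions made for illustration.

```java
import java.security.Principal;

// Hypothetical stand-in for the container-provided security calls.
interface CallerContext {
    boolean isCallerInRole(String roleName);
    Principal getCallerPrincipal();
}

// Hypothetical domain object: each account records its owner's user name.
final class Account {
    private final String ownerName;
    private final long balanceCents;

    Account(String ownerName, long balanceCents) {
        this.ownerName = ownerName;
        this.balanceCents = balanceCents;
    }

    String getOwnerName() { return ownerName; }
    long getBalanceCents() { return balanceCents; }
}

final class AccountService {
    private final CallerContext ctx;

    AccountService(CallerContext ctx) { this.ctx = ctx; }

    // Tellers may read any account; customers may read only their own.
    long readBalance(Account account) {
        if (ctx.isCallerInRole("Teller")) {
            return account.getBalanceCents();
        }
        Principal caller = ctx.getCallerPrincipal();
        if (caller != null
                && ctx.isCallerInRole("Customer")
                && account.getOwnerName().equals(caller.getName())) {
            return account.getBalanceCents();
        }
        throw new SecurityException("Caller may not read this account");
    }
}

// Fake context for trying the rule without an application server.
final class FixedCaller implements CallerContext {
    private final String name;
    private final String role;

    FixedCaller(String name, String role) {
        this.name = name;
        this.role = role;
    }

    public boolean isCallerInRole(String roleName) { return role.equals(roleName); }

    public Principal getCallerPrincipal() {
        return new Principal() {
            public String getName() { return name; }
        };
    }
}
```

The point of the design is that the identity and roles come from the runtime, not from anything the client supplies; the application only adds the per-instance comparison.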

An example of weak security

Here is one quick example of a weak security system. Applications that don't use WAS security tend to create their own security tokens and pass them within the application. These tokens typically contain the user's name and some security attributes, such as their group memberships. It is not at all uncommon for these security tokens to have no cryptographically verifiable information. The presumption is that we can make security decisions based on the information in these tokens. This is false. The tokens simply assert user privileges.

The problem here is that any Java program can forge one of these security objects, and then possibly sneak into the system through a back door. The best example of this is when the application creates these tokens in the servlet layer and then passes them to an EJB layer. If the EJB layer is not secured (see the next section), intruders can call an EJB directly with forged credentials, rendering the application's security meaningless. Thus, without substantial engineering efforts, the only reliable secure source of user information is the WAS infrastructure. (Note that we can integrate the WAS security infrastructure with other authentication or authorization products. For example, Tivoli Access Manager can provide authentication and authorization support.)

41. Secure every layer of the application (particularly EJBs)

All too often, Web applications are deployed with some degree of security (home-grown or WAS-based) at the servlet layer, but the other layers that are part of the application are left unsecured. This is done under the false assumption that only servlets need to be secured in the application because they are the front door to the application. But, as any police officer will tell you, you have to lock the back door and windows to your home as well.

There are many ways for this to occur, but it is most commonly seen when EJBs are used as part of a multi-tier architecture in which Java clients aren't part of the application. In this case, developers often assume that the EJBs do not need to be secured since they are not "user-accessible" in their application design, but this assumption is dangerously wrong. An intruder can bypass the servlet interfaces, go directly to the EJB layer, and wreak havoc if you have no security enforcement at that layer. This is easy to do with available Java IDEs that can inspect running EJBs, obtain their metadata, and dynamically create test clients. Rational Application Developer is capable of this, and developers see this every day when they use the integrated test client.

Often, the first reaction to this problem is to secure the EJBs via some trivial means, perhaps by marking them accessible to all authenticated users. But, depending on the registry, "all authenticated users" might be every employee in a company. Some take this a step further and restrict access to members of a certain group that means roughly "anyone that can access this application." That's better, but it's usually not sufficient, as everyone that can access the application shouldn't necessarily be able to perform all the operations in the application. The right way to address this is to implement authorization checks in the EJBs. You might also consider implementing EJBs as local EJBs, making it impossible to connect to them remotely.

42. Do not rely on HTTP Session for user identity

Unfortunately, some applications that use their own security track the user's authentication session through the use of the HTTP Session. This is dangerous. The session is tracked via a session ID (on the URL or in a cookie). While the ID is randomly generated, it is still subject to replay attacks because it does not time out (except when idle). On the other hand, the LTPA token, which is created when WAS security is used by the application, is designed to provide a stronger authentication token. In particular, LTPA tokens have limited lifetimes and use strong encryption. The security subsystem audits the receipt of potentially forged LTPA tokens.

43. Validate all user input

Cross-site scripting is a fairly insidious attack that takes advantage of the flexibility and power of Web browsers. Most Web browsers can interpret various scripting languages, such as JavaScript. The browser knows it is looking at something executable based upon a special sequence of escape characters. There lies the power, and dangerous flaw, of the Web browser security model.

Intruders take advantage of this hole by tricking a Web site into displaying in the browser a script that the intruder wants the site to execute. This is accomplished fairly easily on sites that allow for arbitrary user input. For example, if a site includes a form for inputting an address, a user can instead input JavaScript. When the site displays the address later, the Web browser will execute the script. That script, since it is running inside the Web browser from the site, has access to secure information, such as the user's cookies.

So far, this doesn't seem dangerous, but intruders take this one step further. They trick a user into going to a Web site and inputting the "evil script", perhaps by sending the user an innocent URL in an email. Now the intruder can use the user's identity to do harm. See Understanding Malicious Content Mitigation for Web Developers for more information.

This problem is actually a special case of a much larger class of problems related to user input validation. Whenever you allow a user to type in free text, ensure that the text doesn't contain special characters that could cause harm. For example, if a user were to type in a string that is used to search some index, it may be important to filter the string for improper wildcard characters that might cause unbounded searches. In the case of cross-site scripting prevention, we need to filter out the escape characters for the scripting languages supported by the browser. The message here is that all external input should be considered suspect and should be carefully validated.
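For the cross-site scripting case, one common approach is to encode the HTML-significant characters when user-supplied text is written back to a page, rather than trying to strip them from the input. The following is a minimal sketch of that approach; the HtmlEscaper class is a hypothetical helper, not a WAS API, and it covers only text placed in HTML element content or quoted attribute values.

```java
// Hypothetical helper that encodes characters a browser would otherwise
// interpret as markup, so user-supplied text is displayed rather than executed.
final class HtmlEscaper {
    private HtmlEscaper() {}

    static String escape(String input) {
        if (input == null) {
            return "";
        }
        StringBuilder out = new StringBuilder(input.length() + 16);
        for (int i = 0; i < input.length(); i++) {
            char c = input.charAt(i);
            switch (c) {
                case '&':  out.append("&amp;");  break;
                case '<':  out.append("&lt;");   break;
                case '>':  out.append("&gt;");   break;
                case '"':  out.append("&quot;"); break;
                case '\'': out.append("&#39;");  break;
                default:   out.append(c);
            }
        }
        return out.toString();
    }
}
```

An address field containing a script tag would then be rendered as literal text by the browser. Other contexts (URLs, inline JavaScript, search indexes) need their own validation rules.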

44. Store information securely

To create a secure system, consider where information is stored or displayed. Sometimes, fairly serious security leaks can be introduced by accident. For example, be cautious about storing highly confidential information in the HTTP Session object, as this object may be serialized to the database. Thus, that information could be read from there. If an intruder has access to your database, or even raw machine-level access to the database volumes, he or she might be able to see information in the session. Needless to say, such an attack would take a high degree of skill.
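One possible way to keep a confidential value out of a persisted session is to mark the field transient, so that standard Java serialization skips it. The sketch below assumes that session persistence uses standard Java serialization. The CheckoutState and SessionPersistenceDemo classes are hypothetical, and roundTrip only simulates a write to and read from a session store.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical object that an application might store in the HTTP Session.
final class CheckoutState implements Serializable {
    private static final long serialVersionUID = 1L;

    private final String cartId;
    // transient: not written out when the object is serialized.
    private transient String cardNumber;

    CheckoutState(String cartId, String cardNumber) {
        this.cartId = cartId;
        this.cardNumber = cardNumber;
    }

    String getCartId() { return cartId; }
    String getCardNumber() { return cardNumber; }
}

final class SessionPersistenceDemo {
    private SessionPersistenceDemo() {}

    // Serializes and deserializes the state in memory, standing in for a
    // write to and read from a session database.
    static CheckoutState roundTrip(CheckoutState state) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(state);
            }
            try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (CheckoutState) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("Serialization round trip failed", e);
        }
    }
}
```

The trade-off is that the transient value is gone after the session is restored from the store, so the application must be prepared to obtain it again.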

45. Auditing and tracing can be sensitive